cross-posted from: https://programming.dev/post/37278389

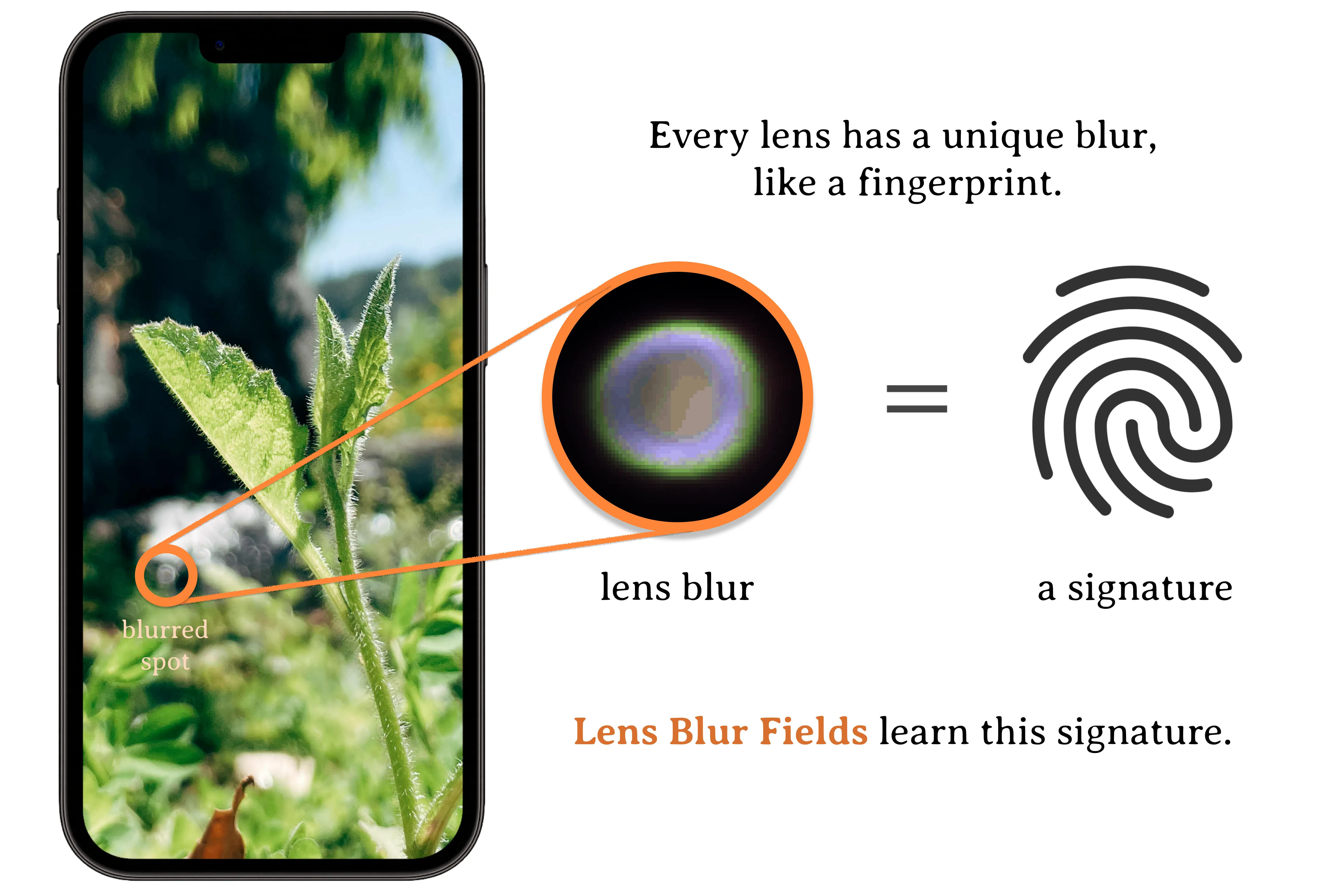

Optical blur is an inherent property of any lens system and is challenging to model in modern cameras because of their complex optical elements. To tackle this challenge, we introduce a high‑dimensional neural representation of blur—the lens blur field—and a practical method for acquisition.

The lens blur field is a multilayer perceptron (MLP) designed to (1) accurately capture variations of the lens 2‑D point spread function over image‑plane location, focus setting, and optionally depth; and (2) represent these variations parametrically as a single, sensor‑specific function. The representation models the combined effects of defocus, diffraction, and aberration, and accounts for sensor features such as pixel color filters and pixel‑specific micro‑lenses.
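To make the idea of "an MLP as a blur field" concrete, here is a minimal, dependency-free sketch. It is not the authors' architecture: the layer sizes, the ReLU and softplus activations, the coordinate ranges, and the names `init_mlp`, `blur_field`, and `sample_psf` are all assumptions for illustration, and the weights are random rather than fitted to a real lens. It only shows the shape of the function: a 5‑D query (image-plane location, focus setting, offset within the PSF) goes in, one PSF intensity comes out.

```python
import math
import random


def init_mlp(layer_sizes, seed=0):
    """Random weights for a tiny fully connected network (untrained, illustrative)."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        params.append((weights, biases))
    return params


def blur_field(params, x, y, focus, u, v):
    """Evaluate the network at one 5-D query and return a positive PSF value.

    (x, y): image-plane location, focus: focus setting,
    (u, v): offset inside the 2-D point spread function.
    All coordinates are assumed to be normalized to roughly [-1, 1].
    """
    h = [x, y, focus, u, v]
    for i, (weights, biases) in enumerate(params):
        h = [sum(w * a for w, a in zip(row, h)) + b for row, b in zip(weights, biases)]
        if i < len(params) - 1:
            h = [max(0.0, a) for a in h]  # ReLU on hidden layers
    return math.log1p(math.exp(h[0]))  # softplus keeps the output positive


def sample_psf(params, x, y, focus, size=5):
    """Sample a size x size PSF at one image location and normalize it to sum to 1."""
    coords = [-1.0 + 2.0 * i / (size - 1) for i in range(size)]
    psf = [[blur_field(params, x, y, focus, u, v) for u in coords] for v in coords]
    total = sum(map(sum, psf))
    return [[p / total for p in row] for row in psf]


params = init_mlp([5, 16, 16, 1])
psf_center = sample_psf(params, x=0.0, y=0.0, focus=0.5)  # PSF at the image center
psf_corner = sample_psf(params, x=0.9, y=0.9, focus=0.5)  # PSF near a corner
```

Adding depth as a sixth input would only change the first layer size from 5 to 6. Because the whole blur behavior lives in one set of weights, two physical cameras can be compared by comparing their fitted networks or the PSFs sampled from them.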

We provide a first‑of‑its‑kind dataset of 5‑D blur fields—for smartphone cameras, camera bodies equipped with a variety of lenses, etc. Finally, we show that acquired 5‑D blur fields are expressive and accurate enough to reveal, for the first time, differences in optical behavior of smartphone devices of the same make and model.

Sanitize metadata and Exif data?

That’s probably enough to stop your online mates from doxing you, but a powerful enough adversary can trace the small, unique fingerprints that a camera lens leaves in a picture and compare them with images from other sources, like social media.
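For the metadata half of that, here is a rough sketch of what stripping Exif from a JPEG amounts to at the byte level. It assumes a plain baseline JPEG layout (SOI marker, then length-prefixed segments, then the scan data) and only drops APP1 segments, which is where Exif and XMP normally live. The function name `strip_app1_segments` is made up for this example. Other metadata carriers (comment segments, IPTC in APP13) are left alone here, so treat it as an illustration and not a complete sanitizer.

```python
import struct


def strip_app1_segments(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG byte string without APP1 (Exif/XMP) segments."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(jpeg[:2])
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError("malformed JPEG: expected a marker")
        marker = jpeg[pos + 1]
        if marker == 0xDA:
            # Start of scan: compressed image data follows, copy the rest unchanged.
            out += jpeg[pos:]
            return bytes(out)
        # The 2-byte big-endian length counts itself but not the marker bytes.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        if marker != 0xE1:  # keep every segment except APP1
            out += jpeg[pos:pos + 2 + length]
        pos += 2 + length
    return bytes(out)
```

Note that this touches only the container, not the pixels, which is exactly why it does nothing against the lens and sensor fingerprints.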

There are many steps that can introduce patterns: the way the lens blurs, as explained in the article, sensor readout noise patterns, a speck of dust, scratches. I bet chromatic aberrations also differ between multiple copies of the same lens.
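A toy sketch of how such a comparison could work for the sensor-noise case: estimate a fixed per-pixel pattern by averaging the high-frequency residue of several photos from one camera, then correlate it with the residue of a new photo. Everything here is an assumption for illustration: the "photos" are synthetic, the 3x3 box-blur high-pass is a crude stand-in for the denoising filters real forensic tools use, and the function names are made up. It is not the method from the article, just the general pattern-matching idea.

```python
import random


def high_pass(img):
    """Subtract a 3x3 box blur (edges clamped) to keep only fine-grained noise."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [img[min(max(r + dr, 0), h - 1)][min(max(c + dc, 0), w - 1)]
                      for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            row.append(img[r][c] - sum(window) / 9.0)
        out.append(row)
    return out


def mean_image(images):
    """Pixel-wise average of equally sized images."""
    n = len(images)
    return [[sum(px) / n for px in zip(*rows)] for rows in zip(*images)]


def correlation(a, b):
    """Pearson correlation between two equally sized images."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def fake_photo(pattern, rng):
    """Synthetic photo: flat random brightness + fixed sensor pattern + per-shot noise."""
    brightness = rng.uniform(50, 200)
    return [[brightness + p + rng.gauss(0, 0.2) for p in row] for row in pattern]


rng = random.Random(0)
size = 16
camera_a = [[rng.gauss(0, 1) for _ in range(size)] for _ in range(size)]
camera_b = [[rng.gauss(0, 1) for _ in range(size)] for _ in range(size)]

# Fingerprint of camera A, estimated from eight of its photos.
fingerprint_a = mean_image([high_pass(fake_photo(camera_a, rng)) for _ in range(8)])
score_same = correlation(high_pass(fake_photo(camera_a, rng)), fingerprint_a)
score_other = correlation(high_pass(fake_photo(camera_b, rng)), fingerprint_a)
print(f"same camera: {score_same:.2f}, other camera: {score_other:.2f}")
```

In this synthetic setup the photo from the same camera correlates strongly with the fingerprint and the photo from the other camera does not. Real images are far messier, but the principle is why scrubbing metadata alone may not be enough against a well-resourced adversary.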