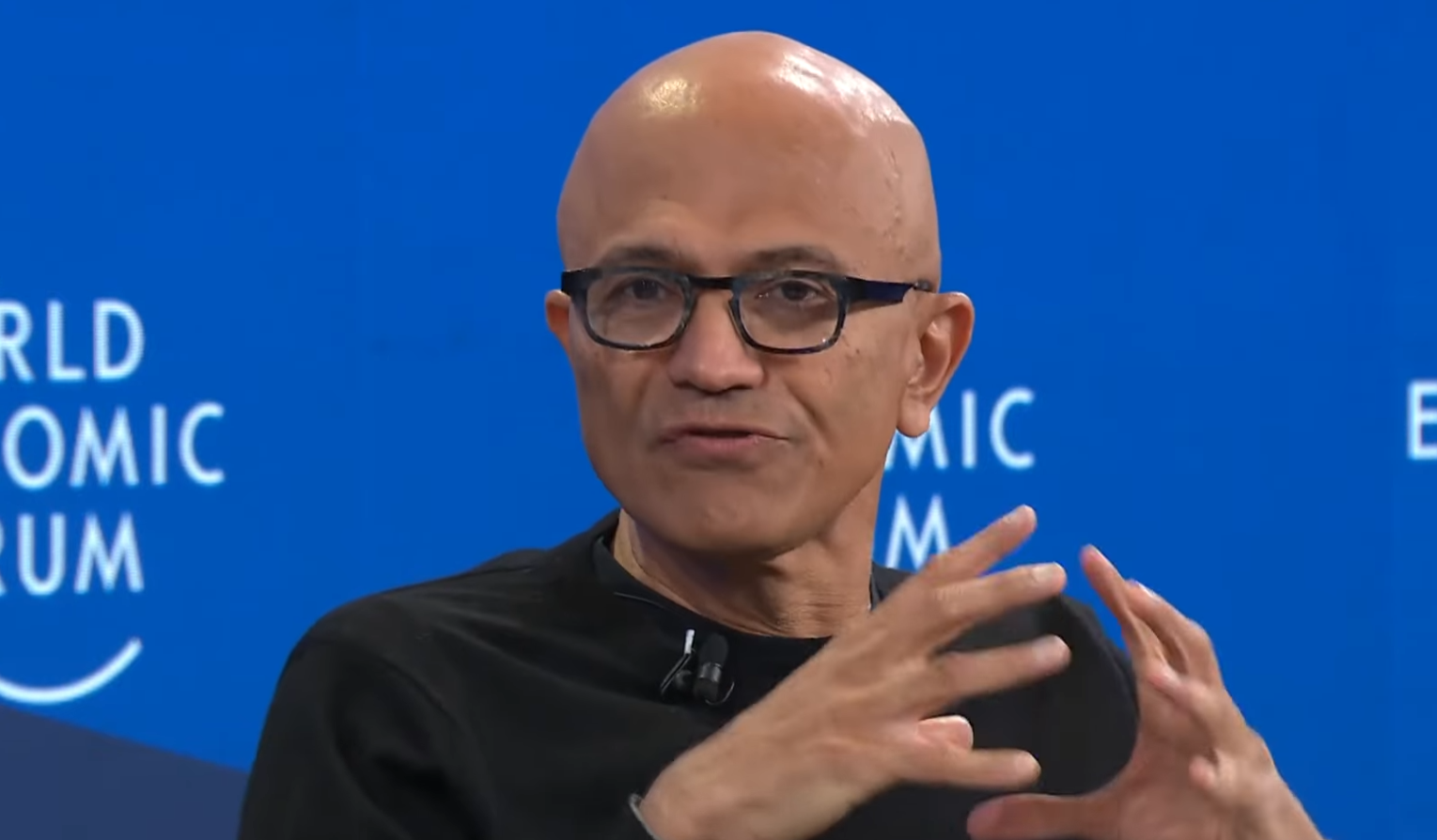

Workers should learn AI skills and companies should use it because it’s a “cognitive amplifier,” claims Satya Nadella.

in other words please help us, use our AI

So…he has something USELESS and he wants everybody to FIND a use for it before HE goes broke?

I’ll get right on it.

I was expecting something much worse but to me it feels like he’s saying “we, the people working on this stuff, need to find real use cases that actually justify the expense” which is…pretty reasonable

Not defending him or Microsoft at all here but it sounds like normal business shit, not a CEO begging users to like their product

I mean, it would be a lot more reasonable if the entire tech industry hadn’t gone absolutely 100% all-in on investing billions and billions of dollars into the technology before realizing that they didn’t have any use cases to justify that investment.

Oh you don’t have to convince me it was a mistake, his comment just wasn’t what it’s being made out to be. “Find a use for it or we’re fucked” is a lot different than “please use our product or we’re fucked”

Idk I don’t really see much of a distinction really

I dunno, there’s a pretty big distinction. “Please use our shitty thing” vs “Please make our shitty thing better so people want to use it”

It’s placing “blame” on the industry leaders for failing to make something useful

But HE is the industry leader.

It’s not like it wasn’t him that sunk every successful product they still had in the last couple of years.

“Cognitive amplifier?” Bullshit. It demonstrably makes people who use it stupider and more prone to believing falsehoods.

I’m watching people in my industry (software development) who’ve bought into this crap forget how to code in real-time while they’re producing the shittiest garbage I’ve laid eyes on as a developer. And students who are using it in school aren’t learning, because ChatGPT is doing all their work - badly - for them. The smart ones are avoiding it like the blight on humanity that it is.

As evidence: How the fuck is a company as big as Microsoft letting their CEO keep making such embarrassing public statements? How the fuck has he not been forced into more public speaking training by the board?

This is like the 4th “gaffe” of his since the start of the year!

You don’t usually need “social permission” to do something good. Mentioning that is at best, publicly stating that you think you know what’s best for society (and they don’t). I think the more direct interpretation is that you’re openly admitting you’re doing the type of thing that you should have asked permission for, but didn’t.

This is past the point of open desperation.

> I’m watching people in my industry (software development) who’ve bought into this crap forget how to code in real-time while they’re producing the shittiest garbage I’ve laid eyes on as a developer.

I just spent two days fixing multiple bugs introduced by some AI-made changes. The person who submitted them, a senior developer, had no idea what the code was doing; he just prompted some words into Claude and submitted it without checking if it even worked. Then it was “reviewed” and blindly approved by another coworker whose reasoning, in his words, was “if the AI made it, then it should be alright”.

I’ve been programming professionally for 25 years. Lately we’re all getting these messages from management that don’t give requirements but instead give us a heap of AI-generated code and say “just put this in.” We can see where this is going: management are convincing themselves that our jobs can be reduced to copy-pasting code generated by a machine, and the next step will be to eliminate programmers and just have these clueless managers. I think AI is robbing management of skills as well as developers. They can no longer express what they want (not that they were ever great at it): we now have to reverse-engineer the requirements from their crappy AI code.

> but instead give us a heap of AI-generated code and say “just put this in.”

> we now have to reverse-engineer the requirements from their crappy AI code.

It may be time for some malicious compliance.

Don’t reverse engineer anything. Do as you’re told and “just put this in” and deploy it. Everything will break and management will explode, but now you’ve demonstrated that they can’t just replace you with AI.

Now explain what you’ve been doing (reverse engineering to figure out their requirements), but that you’re not going to do that anymore. They need to either give you proper requirements so that you can write properly working code, or they give you AI slop and you’re just going to “put it in” without a second thought.

You’ll need your whole team on board for this to work, but what are they going to do, fire the whole team and replace them with AI? You’ll have already demonstrated that that’s not an option.

And they are all getting dependent on and addicted to something that is currently almost “free”, but the monetization of it all will soon come in force. Good luck having the money to keep paying for it or the capacity to handle all the advertisement it will soon start to push out. I guess the main strategy is to manipulate people into getting experience with it, with these 2 or 3 years basically being equivalent to a free trial, ensuring people will demand access to the tools from their employers, or pay for them out of their own pockets. When barely anyone is able to get their employers to pay for things like IDEs… Oh well.

“Social permission” is one term for it.

Most people don’t realize this is happening until it hits their electric bills. Microslop isn’t permitted to steal from us. They’re just literal thieves and it takes time for the law to catch up.

you will enjoy your chatbot that confidently tells lies while electricity bill goes up by 50% and the nearby datacentres try to make the next model not use em-dashes

As a long-time user of the em-dash I’m pissed off that my usual writing style now makes people think I used AI. I have to second-guess my own punctuation and paraphrase.

How can you lose social permission that you never had in the first place?

The peasants might light their torches

I work in AI and the only obvious profit is the ability to fire workers, who then need to be rehired after some months, but at lower wages. It is indeed a powerful tool, but tools are not driving profits. They are a cost. Unless you run a disinformation botnet, scamming websites, or porn. It is too unpredictable to really automate software creation (fuzzy is the term; we somehow mitigate with a stochastic approach). Probably the movie industry is also cutting costs, but not sure.

AI is the way capital is trying to acquire skills cutting off the skilled.

Have to say though that having an interface that understands natural language opens so many possibilities. Which could really democratize access to tech, but they are so niche that they would never really drive profit.

> AI is the way capital is trying to acquire skills cutting off the skilled.

They are banking on that. They have been talking about replacing humanity for decades. But what that means is a few select humans (i.e. them) will survive and be tended to hand and foot by AI who will also invent things for them.

They want that. We aren’t there yet… and probably never will. But that is what they want.

When the rest of us can’t afford to eat and face famine because the rich have gotten so fat off of hoarding everything, people will have no other choice but to eat the rich

The entire world order was built on crushing dissent and monitoring anyone inching to do anything. They probably let people vent by not censoring stuff online but they are probably documenting everything we say. My question is not why, but how the hell can it be organized? I am honestly afraid to show up anywhere because I feel like the moment I do I will be blackbagged and disappeared.

AI isn’t at all reliable.

Worse, it has a uniform distribution of failures in the domain of seriousness of consequences - i.e. it’s just as likely to make small mistakes with minuscule consequences as major mistakes with deadly consequences - which is worse than even the most junior of professionals.

(This is why, for example, an LLM can advise a person with suicidal ideas to kill themselves)

Then on top of this, it will simply not learn: if it makes a major deadly mistake today and you try to correct it, it’s just as likely to make a major deadly mistake tomorrow as it would be if you didn’t try to correct it. Even if you have access to actually adjust the model itself, correcting one kind of mistake just moves the problem around and is akin to trying to stop the tide on a beach with a sand wall - the only way to succeed is to have a sand wall for the whole beach, by which point it’s in practice not a beach anymore.

You can compensate for this by having human oversight on the AI, but at that point you’re just back to having to pay humans for the work being done. So instead of having the cost of a human to do the work, you have the cost of the AI to do the work plus the cost of a human to check the work of the AI. The human has to check the entirety of the work just to make sure, since problems can pop up anywhere and take any form and, worse, unlike a human’s, the AI’s work is not consistent, so errors are unpredictable. On top of that, the AI will never improve, and it will never include the kinds of improvements that humans doing the same work discover over time to make later work, or other elements of the work, easier to do (i.e. how increased experience means you learn to do little things that make your work and even the work of others easier).

This seriously limits the use of AI to things where the consequences of failure can never be very bad. (For businesses, “not very bad” includes things like “not significantly damaging client relations”, which is much broader than merely “not life-threatening”; this is why, for example, lawyers using AI to produce legal documents are getting into trouble when the AI quotes made-up precedents.) That mostly leaves entertainment and situations where the AI alerts humans to a potential finding within a massive dataset: if the AI fails to spot it, that’s alright, and if the AI incorrectly spots something that isn’t there, the subsequent human validation can dismiss it as a false positive. Examples are face recognition in video streams for the purpose of general surveillance, where humans watching those video streams are just as likely or more likely to miss it and an AI alert just results in a human checking it, or scientific research where one tries to find unknown relations in massive datasets.

So AI is a nice new technological tool in a big toolbox, not a technological and business revolution justifying the stock market valuations around it, investment money sunk into it or the huge amount of resources (such as electricity) used by it.

Specifically for Microsoft, there doesn’t really seem to be any area where MS’ core business value for customers gains from adding AI, in which case this “AI everywhere” strategy in Microsoft is an incredibly shit business choice that just burns money and damages brand value.

It’s funny because this is an admission that he’s not actually done anything interesting, which is a complete pivot from him screaming about how good AI is for the last 2 years.

Honestly, this is the most reasonable take I have heard from tech bros on AI so far… Use it for something useful and stop using it for garbage!

AI has a million great uses that could make so many things so much easier, but instead we are building AI to undress women on Twitter

“we” aren’t doing that. The tech bros are.

Did they ever have social permission in the first place?

Literally burning the planet with power demand from data centers but not even knowing what it could possibly be good for?

That’s eco-terrorism for lack of a better word.

Fuck you.

The whole point of “AI” is to take humans OUT of the equation, so the rich don’t have to employ us and pay us. Why would we want to be a part of THAT?

AI data centers are also sucking up all the high quality GDDR5 ram on the market, making everything that relies on that ram ridiculously expensive. I can’t wait for this fad to be over.

Not to mention the water depletion and electricity costs that the people who live near AI data centers have to deal with, because tech companies can’t be expected to be responsible for their own usage.

The oligarch class is again showing why we need to upset their cart.

- Denial

- Anger

- Bargaining <- They’re here

- Depression

- Acceptance

Denial: “AI will be huge and change everything!”

Anger: “noooo stop calling it slop its gonna be great!”

Bargaining: “please use AI, we spent so much money on it!”

Depression: companies losing money and dying (hopefully)

Acceptance: everyone gives up on it (hopefully)

Acceptance: It will be reduced to what it does well and priced high enough so it doesn’t compete with equivalent human output. Tons of useless hardware will flood the market, China will buy it back and make cheap video cards from the used memory.

The five stages of corporate grief:

- lies

- venture capital

- marketing

- circular monetization

- private equity sale

Translation: Microslop’s executives are finally starting to realize that they fucked up.

“Microsoft thinks it has social permission to burn the planet for profit” is all I’m hearing.

Probably in the Hobbes sense that they’re not actively revolting