I recently learned a new way of understanding what “turning the other cheek” means. Rather than a passive acceptance of abuse, it’s like holding a mirror up to the abusers and making them look like the villains (which they actually are). This post is very much in the same spirit.

The Cheek Slap in Jesus’ Day

In Jesus’ day, hitting a person on the cheek was a forceful insult, but it was not considered a violent assault. Here, Jesus is specifying a strike on the right cheek, which implies a back-handed slap. Striking someone with the back of the hand (3) could demand a doubled fine because it was “the severest public affront to a person’s dignity.” (4)

But Jesus is not suggesting that his followers should stand around and take abuse. First, turning the left cheek was a bold rejection of the insult itself. Second, it challenged the aggressor to repeat the offense, while requiring that they now strike with the palm of their hand, something done not to a lesser but to an equal. In other words, turning the other cheek strongly declares that the opposer holds no power for condescending shame because the victim’s honor is not dependent on human approval—it comes from somewhere else. (5) This kind of action reshapes the relationship, pushing the adversary to either back down or to treat them as an equal.

Source: https://bibleproject.com/articles/what-jesus-meant-turn-other-cheek-matthew-539/

This is the first time I understood what was meant by this part of the Bible.

Yeah this makes a lot more sense than the typical interpretation of, “This is fine, hit me some more. I can take it til you tire of being an asshole.”

Atheist here. Always understood it as enduring abuse where unavoidable and not escalating it. It’s not fair but it ends the cycle of abuse assuming you can take it and not be fazed too much by it.

What if the aggressor also struck back-handed the second time, now using his left hand?

In many parts of the world, it’s not uncommon for hands to be the go-to utensils for enjoying a meal. For most of human history, hands have been the primary tool for most things, food consumption included. Today, the tradition is governed by cultural etiquette rules in much of India, Africa, and the Middle East – the birthplaces of human civilization. In majority-Muslim countries, Islamic doctrine dictates that food should be consumed with the right hand, a decree by the Prophet Muhammad that can be found in the Qur’an. This is one of the reasons why in most Middle Eastern countries, the left and right hands have distinct purposes when it comes to activities involving cleanliness and consumption.

Custom dictates that the left hand should be reserved for bodily hygiene purposes, or “unclean” activities, while the right hand is favored for eating, greeting, and other such “clean” activities. It’s best practice to default to the right hand for gift-giving, handing over money, or greeting another person – while the left is primarily for cleaning oneself. For this reason, using the left hand to eat or shake someone’s hand is considered not only unhygienic but potentially insulting as well.

Read More: https://www.foodrepublic.com/1573656/why-left-handed-eating-frowned-upon-middle-east/

Seems like this proposed Jesus theory doesn’t equalize the situation as much as give your opponent a chance to quadruple up on the insult by slapping you with their medieval toilet brush. Not sure I buy that interpretation as nice as it sounds.

How fitting the image in the post lacks alt text. 🤦

The image has alt text. Maybe your client doesn’t support it though.

That’s interesting. At my instance & the community’s, I’m seeing

<img src="https://sopuli.xyz/pictrs/image/c274526d-0ee6-4954-9897-100010dc5571.webp" alt="" title="" loading="lazy">

attribute alt entirely blank. Yet in yours & the author’s, the attribute is filled. Seems they run later versions of lemmy with better accessibility: added alt_text for image posts introduced in version 0.19.4.

I stand corrected: instances get different accessibility experiences. 😞

This feels like an apt microcosm of a lot of accessibility issues: even when people do what they can to make things accessible (such as adding alt text), fragmentation and complexity lead to an unequal distribution of accessibility. Standardisation can help, but I’ve also seen projects that lose sight of ultimate aims (such as but not limited to greater accessibility) when they treat standardisation of protocols etc as a goal in and of itself. When it gets to that point, I feel like we’re more likely to see a proliferation of standards rather than a consolidation. It gets messy, is my point.

I find it super interesting as someone who has a few different (and sometimes competing) access needs, because some of the most upsetting times that I’ve faced inaccessible circumstances have been where there was no-one at fault.

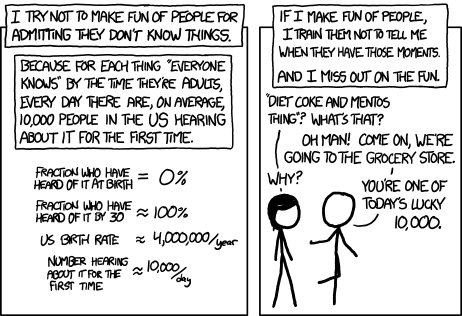

Just realized I’ve seen the push multiple times to include alt text, but not guidance on how.

Is there an actual etiquette to follow or even a specific format for alt text? Or just a sentence describing the image and call it a day?

Maybe this blog post is of use. Good Alt Text, Bad Alt Text — Making Your Content Perceivable.

If you don’t feel like reading the entire post you can skip to “Writing good alt text — Context is key”.

The Web Accessibility Initiative has tons of content. For images, you can start from their tips to get started & the tutorials those link to.

Here specifically, you can learn how to set alt text in markdown.

Ok, that’s helpful for providing alt text for images inside the body of a post.

But what about image posts? When using the web interface I don’t see any opportunity for entering the alt text. 🤔

When using the web interface I don’t see any opportunity for entering the alt text.

Apparently, some instances offer it: you might want to ask your instance administrator to upgrade. If yours doesn’t offer it, the text alternative can be adjacent as stated in the success criterion for non-text content

All non-text content that is presented to the user has a text alternative that serves the equivalent purpose

and the techniques for it identified with prefix G. The alt attribute is merely 1 way to accomplish that.

When it’s a screenshot of a webpage, a link to the source often makes sense as a text alternative. I see way too many images that could be blockquotes & links, which are often superior to an image: more accessible & more useful to everyone else. That’s often the case of good accessibility: it benefits everyone else.

I tend to explain what it is, and what the important part is, thinking about it from the perspective of what someone might need to know while also respecting their time. I think “Screen shot of Lemmy post feed with nav bar at top, first post says “blah”, second post says “blah blah”, third post says…” is too much unnecessary detail. So I’d do something like “Screen shot of Lemmy post feed, showing the third post called “Blah blah” has a green highlight over the first word” or whatever the message is I’m trying to get across with the screenshot.

I don’t know if there is etiquette or a specific format but I would write as much as is needed to convey the reason you’re including the image (whether that is a sentence or 100 words), striking a balance between making sure someone who is relying on the alt text can understand everything they need to know while also respecting their time.

I found that even when you can see the image, alt-text often helps significantly with understanding it. e.g. by calling a character or place by name or saying what kind of action is being done.

deleted by creator

I would gladly train a million AI robots just to make the web a lot cooler for those with visual impairment.

The AI ship has already sailed. No need to harm real humans because somebody might train an AI on your data.

Honestly I think that sort of training is largely already over. The datasets already exist (have for over a decade now), and are largely self-training at this point. Any training on new images is going to be done by looking at captions under news images, or through crawling videos with voiceovers. I don’t think this is a going concern anymore.

And, incidentally, that kind of dataset just isn’t very valuable to AI companies. Most of the use they’re going to get is in being able to create accessible image descriptions for visually-disabled people anyway; they don’t really have a lot more value for generative diffusion models beyond the image itself, since the aforementioned image description models are so good.

In short, I really strongly believe that this isn’t a reason to not alt-text your images.

Maybe the AI can alt text it for us.

It sort of can. Firefox is using a small language model to do just that, in one of the more useful accessibility implementations of machine learning. But it’s never going to be capable of the context that human alt text, from the uploader, can give.

True, but I was thinking maybe something in the create post flow (maybe running client side so as not to overload the lemmy servers 😅) that generates a description that the uploader can edit before (and after) they post it. That way it’s more effort for the poster to not add it than to add it, and if it’s incorrect people will usually post comments to correct it. Maybe also adding a note at the end that it’s AI generated unless the user edits it.

But that’s probably way too complicated for all the different lemmy clients to be feasible to implement tbh.

I think that would make a great browser extension. I’m not in a position to make it right now, but wow, that could potentially be really useful.

AI training data mostly comes from giving exploited Kenyans PTSD; alt-text becoming a common thing on social media came quite a bit after these AI models got their start.

Just be sure not to specify how many fingers, or thumbs, or toes, or that the two shown are opposites L/R. Nor anything about how clown faces are designed.

What do you think is creating all those descriptions?

It’s been great on pixelfed, I appreciate the people that put some time into it

That’s amazing. I’d love to hear from one of the audience about how they found the experience.

You’d love to hear?

Fuck, I laughed, I’m going to hell… Take your upvote

And to alt-text an embedded image in markdown:
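As a minimal sketch of the usual markdown image syntax (the file name and description below are placeholders, not taken from the original post), the alt text goes in the square brackets:

```markdown
![A tabby cat asleep on a laptop keyboard](cat-on-laptop.png)
```

Most markdown renderers turn the bracketed text into the image's `alt` attribute.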

Now this I did not know. Every day’s a school day.

I brought mom’s brownies for recess.

I accidentally brought the brownies from dads house. We should probably eat these first.

I didn’t know you could do that! I’ll try it, let me know if it works.

FYI alt text only applies when the image fails to load. You can get hover text by adding quoted text after the url.
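A sketch of the quoted-text form mentioned here, with placeholder names. The text in quotes after the URL is the image title, which many renderers show on hover:

```markdown
![A tabby cat asleep on a laptop keyboard](cat-on-laptop.png "Hover text goes here")
```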

Preview

Alt text is read by screen readers even if the image loads.

Ok, I can’t imagine blind people able to use a mouse very well. So I also imagine they have a braille keyboard. But does that mean they are set up with speakers and a keyboard and they learn how to navigate a computer really slowly, and that modern webpages are very… Noisy?

You can try the built-in screen reader/accessibility mode in your OS and blow your mind.

Neat. For me on mobile, the hover text takes precedence but they both work.

Voyager doesn’t seem to use the hover text at all. I think it should though, might make a post about it in their community.

Markdown features are extremely fragmented. Hover text might be a non-standard feature that not all markdown renderers can handle (or even a standard feature that’s omitted in some renderers).

That’s interesting. I’m on the default site ui for reference.

I am using Thunder, if I tap the image for both of yours (to make full screen) I can see the alt text on both. Not while I am in the post though, scrolling through replies.

I may have to tap on more images from here on. Thanks to you both for the info and examples!

On Eternity, I can see the alt-text when I long-press on the image.

it does.

Oh shit that’s actually really useful, thank you!

As a video engineer on events, I always love having to accommodate live captioning and signers.

It means more layers on the screen (i.e. picture in picture), more chance to make things look good, and it means the production company / client / organiser has actually thought about their event.

Gigs with wheelchair accessible stages, captioning, hearing loops, and signers are good gigs.

Is this a mute/deaf person giving a talk, or a talking/hearing person being incredibly based?

My assumption is the latter, which is awesome.

Given the username, I’d say that is the case: https://terptheatre.org/

"TerpTheatre is both a source of information about theatre interpreting and a tradition of theatre interpreters in the shadowed strategy. "

Are there any blind people on Lemmy, screenreading this? I get why alt-text is useful functionally on things like application interfaces, and instructive or educational text, but do you actually enjoy hearing a screen reader say "A meme of four panels. First panel. An image of a young man in a field. He is Anakin Skywalker as played by that guy who played Anakin Skywalker in the Star Wars prequels. He says ‘bla bla bla’. Next frame. An image of a young woman. She is Padme as played by Natalie Portman. She is smiling. She says ‘bla bla bla, right?’"?

I personally know many blind people on the fediverse, yes.

the question is do you need at least one blind person to justify alt text or do you want the alt text to make it possible for blind people or people with impaired vision to enjoy if they ever stumble upon it?

This! I often hear certain restaurants don’t have ramps because disabled people don’t eat there. And we don’t because there’s no ramp.

I think they simply don’t want to waste their time. It’s a legitimate question, comes up with handicapped regulations on physical businesses too, although that usually costs money and time.

oh what an inconvenience… I wish I was blind so I didn’t have to spend 4 more minutes transcribing 20 words on a meme.

even if you think it’s a waste of time, I didn’t even comment on the validity of the question. I just gave them the actual consideration they should have.

oh and even if you completely ignore accessibility on that front, transcribing images makes them infinitely more searchable. no one knows what a title would be, people usually don’t put anything helpful or something you can remember. but if you know some of the words you might be able to find it.

it’s like finding songs by searching lyrics from a random part of the song you heard or remember. it would be so much harder if you had to know the title.

There’s also the element of wasting the blind person’s time. I work in enterprise software and our application meets WCAG guidelines but… it’s a busy, text-heavy, actions-heavy application. It can take 5 minutes for a screen reader to read the entire page. Other websites are worse - images as buttons, flavour images and hero banners and icons everywhere. Again, a much more accessible version is just presenting them with what they actually want - a cleaner, leaner, more contextual page like the ones we built in the 90s before images loaded instantly.

So part of me wonders if blind people actually enjoy “listening to memes” or if they’d rather skip it and hear a text-based joke or an audio/video joke. I did specifically say I wanted a blind person’s opinion on it.

I think you’ve underestimated the wordcount and I think you don’t get how memes are shared if you think adding 4 minutes to their re-transmission wouldn’t matter. I care that blind people enjoy the internet but I absolutely do not think “searchability” is a good reason to transcribe “Drake meme but it’s an animal girl. Top panel. Animal girl looking repulsed. The item she is repulsed by is the logo of a Linux package manager called Flatpak…”

I specifically said searchable referring to images in general, not memes. I don’t think people search memes that often.

i also didn’t specify verbosity. Blind people are people, idk why we’re talking like they’re a different species. Whether or not you should alt text an image is directly related to whether or not you consider that image part of the content.

a meme is the content, when someone is looking at a post that’s what they’re looking at and reading. if someone wouldn’t want to read it they can stop reading as soon as they see that it’s a meme. if they want to read it they can keep going.

so no, I don’t think meme transcriptions should be as verbose. so it’s just “animal girl is repulsed by flatpak”. you’re explaining it to people who don’t see as well, not people who were born yesterday.

If an uncaptioned tree falls in the woods without any blind people around…

It’s a genuine question: how much enjoyment does someone with a visual impairment get from a meme that’s purely a visual gag? You could go through a lot of work to make a cliff face wheelchair accessible but it will never be the same experience as rock climbing.

Blindness is a spectrum! So for example, someone could maybe make out the stick figure shape in a comic but not the speech bubbles. Most blind people can still see things but it greatly helps to have things read out as an aid to quite literally see the whole picture :)

And now I wanna try rock climbing as a wheelchair user just because, lol

Very fair point 👉

your analogy makes no sense, and neither does your argument.

first of all the purpose of making places wheelchair accessible is to make it possible for people in wheelchairs to go there, not to give them “the experience” whatever the fuck that is supposed to be.

like, what do you even know about what the wheelchair experience is like, and what makes you think what people in wheelchairs want is the experience of rock climbers, rather than the ability to experience something themselves? have you ever seen an interpreter sign a rap song? what do you think that’s for? to stimulate hearing?

second of all, memes are rarely “purely visual” gags. I don’t even know what makes you even think that. because they’re in an image format? you do realize a woman staring at her boyfriend who’s checking out another woman is in no way a visual gag, right? it’s not about how they look, it’s what they’re doing.

or, to argue on a more basic level, if it’s purely visual why does it have fucking words?

My question simplified: when is the juice worth the squeeze? Most memes have text but lose their humor without the visual aspect.

It’s the difference between a comedian nailing the delivery of a punchline with a perfect impression + cadence + body work and reading a transcript of the bit.

In your rap interpretation example there are both visual and lyrical components to the performance, both of which are significant and individually enjoyable pieces of the art. A better example would be handing sheet music to a deaf person so they could enjoy an orchestra on tv.

On the girlfriend gag: it absolutely is visual. The nuance of the expressions and body language directly increase the effort and skill required to reproduce it.

I could accurately describe it “A man holding hands with woman who looks at him. He looks at a third woman. Captioned […]”. I could spend more time to better convey the humor but the delivery is no longer the meme. It can only ever be as good as my own ability to describe it in a parallel work.

By that logic, would the blind even be enjoying the same content anymore? There are images that translate easily and readily to text, and I agree that everyone should be in the habit of trying to do it. But in making it a rock solid rule it kind of loses the spirit of inclusion in favor of dogma.

On a more basic level, if you could easily write the joke in text why is it an image?

alt text is a part of the post quality like any other

I do alt text on my image posts, especially OC content as I find it’s a curb cutting effect. https://en.m.wikipedia.org/wiki/Curb_cut_effect

I’m not the only person in a rural area who has internet access, and sometimes the alt text is enough to convey the joke/image without the image itself.

Plus I find it a fun test of my vocabulary to use the words I need to explain the joke/image. Sometimes I don’t know the right words and I learn a new one.

When the instance hosting the image dies the alt text stays so I can still read the old posts even if the image is long gone into entropy.

I actually didn’t consider that as a bonus. Nice to know that helps future readers of my shitposts.

I usually either write a proper alt-text if it’s a non-joke image, or an xkcd style extra joke if it’s meant to be a meme.

Well, I do on Mastodon. I know exactly where the button for that is on that platform. Gimme a sec to check where it is on Lemmy.

In jerboa on android there’s a dedicated alt-text field above the “body” field

deleted by creator

I’d probably have just left - wasting my time isn’t the answer for a shitty venue.

Of course you would, because you don’t need a paycheck.